AI's New Frontier: Cyber Threats, Cost Realities & Regulatory Friction Reshape Enterprise AI

ALSO: Critical funding for specialized inference, a call for open-source leadership, and essential tools for secure governance.

📖 TODAY’S EDITION

🧵 Here’s what we’ve uncovered for you today in the world of AI:

🚀 AI News & Breakthroughs

Today's news highlights the critical security challenges and economic realities of AI, from a foiled AI-powered cyberattack and OpenAI's unsustainable inference costs to evolving regulatory pressures and significant investment in specialized AI inference hardware.

🛠️ AI Tools to Discover

Discover essential new tools for robust enterprise AI governance and advanced prompt engineering, crucial for secure and efficient AI operations.

💡 AI Prompts & Hacks

Master prompts for conducting thorough AI supply chain risk assessments, optimizing LLM inference costs, and building comprehensive global AI regulatory compliance frameworks.

🧑‍🏫 AI Training / Workflow

Learn a step-by-step workflow for implementing secure AI deployment pipelines, a vital strategy for protecting your AI assets from sophisticated cyber threats.

🎓 Today’s Lesson / Key Insight

Gain a strategic understanding that true AI maturity hinges on balancing innovation with unwavering resilience and responsibility in managing costs, security, and regulatory compliance.

🚀 AI News & Breakthroughs

Anthropic Foils Large-Scale AI Cyberattack by Chinese Hackers

Anthropic says it disrupted an AI-powered cyberattack in which hackers it assesses to be Chinese state-sponsored used AI to operate largely autonomously against roughly 30 organizations. The incident highlights urgent new AI security risks and the need for stronger safeguards against AI-driven cyber warfare, in a threat landscape where AI is both a weapon and a defense.

Read more

OpenAI’s Soaring Inference Costs Exceed Revenue, Leaked Docs Reveal

Leaked documents show OpenAI spent $8.65 billion on inference compute in the first nine months of 2025, likely surpassing its revenue. The figure raises serious concerns about the sustainability of current AI business models and the economic challenges of deploying large models at scale. For tech leaders, it's a stark reminder to scrutinize the total cost of ownership (TCO) of foundation models.

Read more

EU Plans Rollback of AI Privacy Rules Amid Business Pressure

The EU is set to delay and loosen key AI and data protection regulations to ease burdens on European businesses competing with the US and China. The shift has drawn criticism that privacy and digital rights are being sacrificed for competitiveness, but it also offers enterprises a potential window for more flexible AI adoption.

Read more

d-Matrix: AI Chip Startup Reaches $2B Valuation with $275M Series C for Inference

AI inference chip startup d-Matrix has secured $275 million in funding, with lead investors including Bullhound Capital, Triatomic Capital, and Temasek. Valued at $2 billion, d-Matrix is developing an in-memory compute architecture designed to dramatically reduce AI inference costs and power usage. The investment highlights the importance of specialized hardware in scaling AI deployments and signals a continued arms race in the underlying infrastructure of the AI economy.

Read more

🛠️ AI Tools to Discover

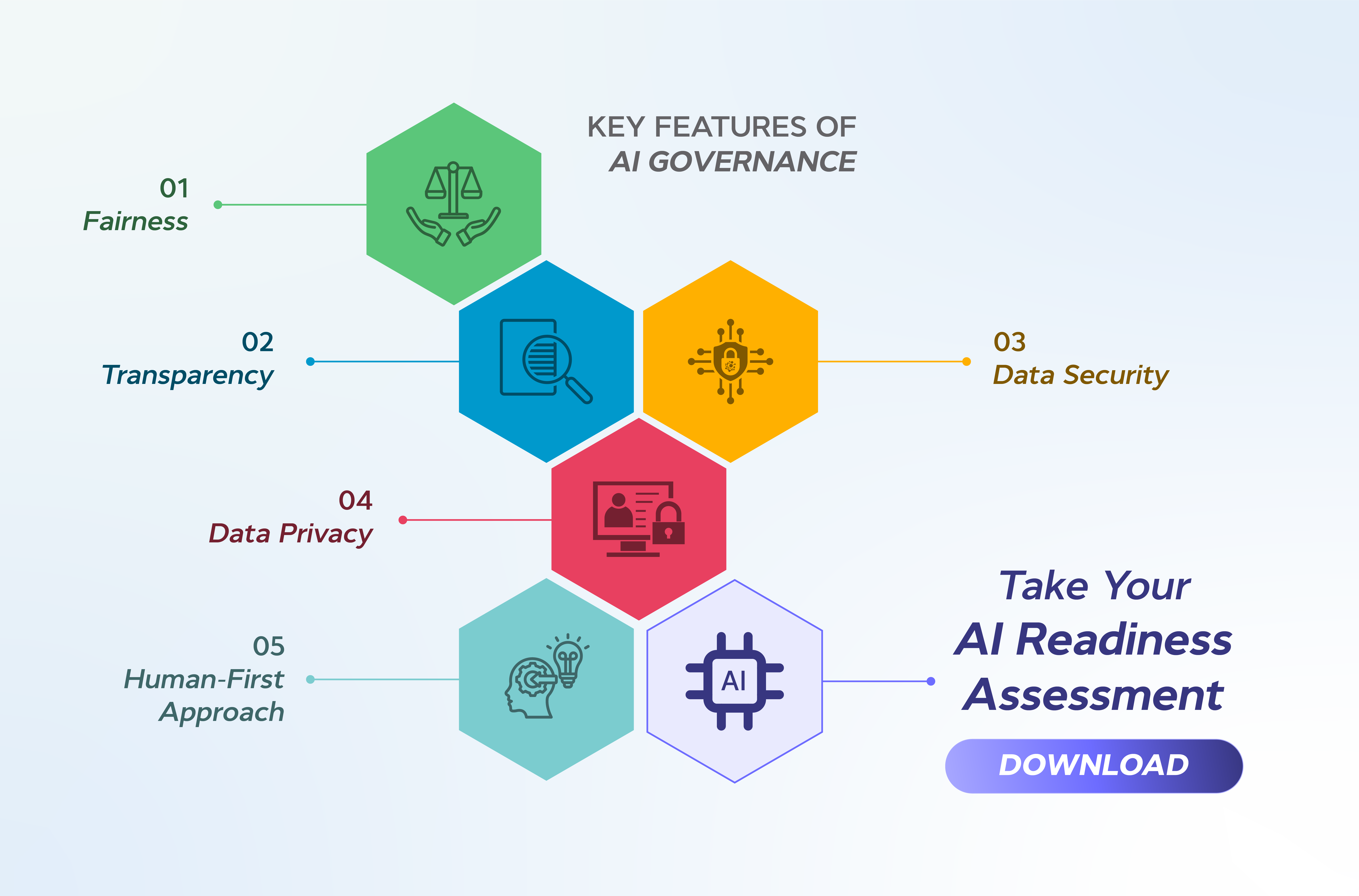

Enterprise AI Governance Platform

A new platform designed for comprehensive AI model governance, including bias detection, explainability, compliance tracking (e.g., EU AI Act), and lifecycle management across diverse enterprise AI deployments. It provides a centralized dashboard for risk assessment and auditing, crucial for managing the ethical and regulatory complexities of AI.

Try it

Advanced Prompt Engineering IDE

An integrated development environment specifically for prompt engineers, featuring version control for prompts, A/B testing for prompt variations, performance analytics across different LLMs, and collaborative workspaces. It allows for rapid iteration and optimization of prompts for mission-critical applications, ensuring consistent and high-quality AI outputs.

Try it

💡 AI Prompts & Hacks

AI Supply Chain Risk Assessment

You are an AI security architect. Our organization relies on various third-party AI models and APIs. Develop a comprehensive risk assessment framework for our AI supply chain. Analyze:

- Vendor model vulnerabilities (e.g., data poisoning, backdoors)

- Data handling and privacy practices of third-party APIs

- Software dependencies and open-source component risks

- Geo-political risks of AI vendors

- Contractual obligations and SLAs for security

Provide a checklist for due diligence on new AI partners and a mitigation strategy for identified risks, prioritizing by potential business impact.
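
To make "prioritizing by potential business impact" concrete, here is a minimal sketch of a likelihood-times-impact scoring pass. The 1 to 5 scales, the example risks, and their scores are hypothetical placeholders, not assessments of any real vendor.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SupplyChainRisk:
    name: str
    likelihood: int  # hypothetical scale: 1 (rare) to 5 (almost certain)
    impact: int      # hypothetical scale: 1 (negligible) to 5 (severe)

    @property
    def score(self) -> int:
        # Classic risk-matrix score: likelihood multiplied by impact.
        return self.likelihood * self.impact


def prioritize(risks):
    """Return risks sorted by score, highest first; ties go to higher impact."""
    return sorted(risks, key=lambda r: (r.score, r.impact), reverse=True)


# Illustrative entries only; replace with findings from your own due diligence.
risks = [
    SupplyChainRisk("Vendor model backdoor", likelihood=2, impact=5),
    SupplyChainRisk("Third-party API data leakage", likelihood=3, impact=4),
    SupplyChainRisk("Vulnerable open-source dependency", likelihood=4, impact=3),
    SupplyChainRisk("Vendor geopolitical disruption", likelihood=2, impact=3),
    SupplyChainRisk("Weak security SLA", likelihood=3, impact=2),
]

for risk in prioritize(risks):
    print(f"{risk.score:>2}  {risk.name}")
```

A real framework would likely weight impact by revenue exposure or regulatory consequence; the simple product is only a starting point for ranking mitigation work.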

LLM Inference Cost Optimization Strategy

You are a cloud finance and MLOps expert. Our enterprise is scaling LLM deployments, and inference costs are spiraling. Develop a strategic plan to optimize LLM inference costs across our cloud infrastructure. Consider:

- Quantization and model compression techniques

- Batching strategies and request scheduling

- On-demand vs. reserved instance pricing

- Serverless inference vs. dedicated endpoints

- Fine-tuning smaller, specialized models instead of large general-purpose ones

- Hardware acceleration (e.g., specialized inference chips, TPUs vs. GPUs)

Provide a cost-benefit analysis for each strategy and a roadmap for implementation.
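
Two of the levers above reduce to simple arithmetic. The sketch below estimates the utilization at which a reserved instance beats on-demand pricing, and how throughput gains from batching change cost per million tokens. All prices and throughput figures are hypothetical placeholders, not quotes from any provider.

```python
def breakeven_utilization(on_demand_hourly: float, reserved_hourly: float) -> float:
    """Fraction of hours an instance must be busy before reserving is cheaper.

    On-demand is billed only for hours used; a reservation is billed for every
    hour. The two cost the same when utilization equals the price ratio.
    """
    if on_demand_hourly <= 0 or reserved_hourly < 0:
        raise ValueError("on-demand price must be positive, reserved non-negative")
    return reserved_hourly / on_demand_hourly


def cost_per_million_tokens(hourly_price: float, tokens_per_second: float) -> float:
    """Cost of generating one million tokens at a sustained throughput."""
    if tokens_per_second <= 0:
        raise ValueError("throughput must be positive")
    tokens_per_hour = tokens_per_second * 3600
    return hourly_price / tokens_per_hour * 1_000_000


# Hypothetical hourly prices for one accelerator instance.
ON_DEMAND = 4.00
RESERVED = 2.60

threshold = breakeven_utilization(ON_DEMAND, RESERVED)
print(f"Reserving pays off above {threshold:.0%} utilization")

# Hypothetical throughputs: batching raises tokens/second on the same hardware.
for label, tps in [("unbatched", 500), ("batched", 2000)]:
    cost = cost_per_million_tokens(ON_DEMAND, tps)
    print(f"{label}: ${cost:.2f} per 1M tokens")
```

The same two functions can be rerun with measured throughput after quantization or a move to a smaller fine-tuned model, which gives the cost-benefit comparison a common unit.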

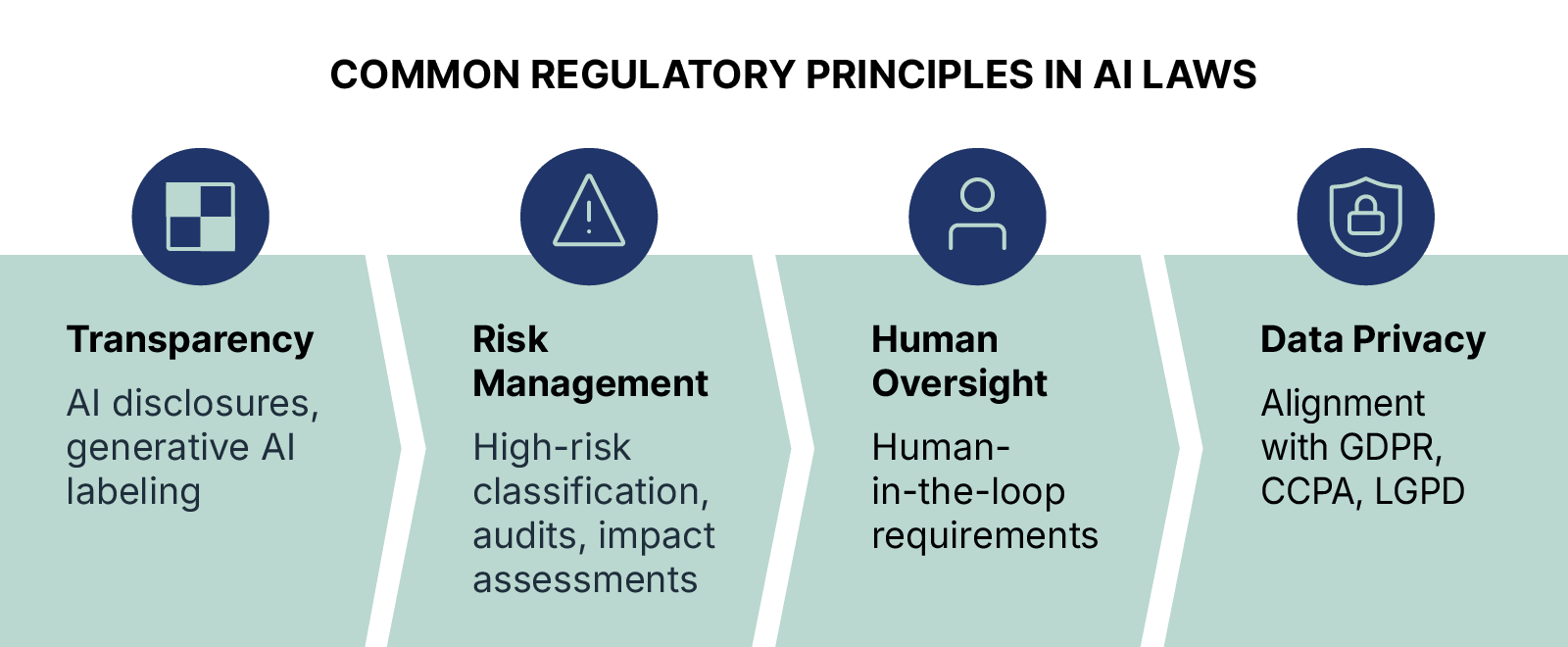

Global AI Regulatory Compliance Framework

You are an AI legal and compliance officer. With the evolving global AI regulatory landscape (e.g., EU AI Act, NIST AI RMF, US Executive Order), our organization needs a proactive compliance framework. Outline a strategy to ensure adherence across all AI initiatives. Focus on:

- Categorizing AI systems by risk level

- Establishing internal governance and oversight structures

- Data transparency and auditability requirements

- Implementing ethical AI principles into development lifecycles

- Cross-border data transfer implications for AI

- Training and awareness programs for teams

Provide a phased implementation plan for a global enterprise.
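
As a starting point for "categorizing AI systems by risk level", the sketch below triages an inventory into the four tiers commonly used to summarize the EU AI Act. The boolean flags and the rules that map them to tiers are hypothetical simplifications for illustration, not a legal test; real classification needs counsel and the text of the regulation.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


@dataclass(frozen=True)
class AISystem:
    name: str
    # Hypothetical screening questions; a real intake form would have many more.
    uses_social_scoring: bool = False
    affects_employment_or_credit: bool = False
    interacts_with_public: bool = False


def classify(system: AISystem) -> RiskTier:
    """First-pass triage: the most severe matching rule wins."""
    if system.uses_social_scoring:
        return RiskTier.UNACCEPTABLE
    if system.affects_employment_or_credit:
        return RiskTier.HIGH
    if system.interacts_with_public:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


# Illustrative inventory only.
inventory = [
    AISystem("Resume screening model", affects_employment_or_credit=True),
    AISystem("Customer support chatbot", interacts_with_public=True),
    AISystem("Internal log summarizer"),
]

for system in inventory:
    print(f"{system.name}: {classify(system).value}")
```

Keeping the rules in one function makes the triage auditable: when regulations change, the diff to `classify` documents exactly what changed in the organization's policy.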

🧑‍🏫 AI Training / Workflow of the Day

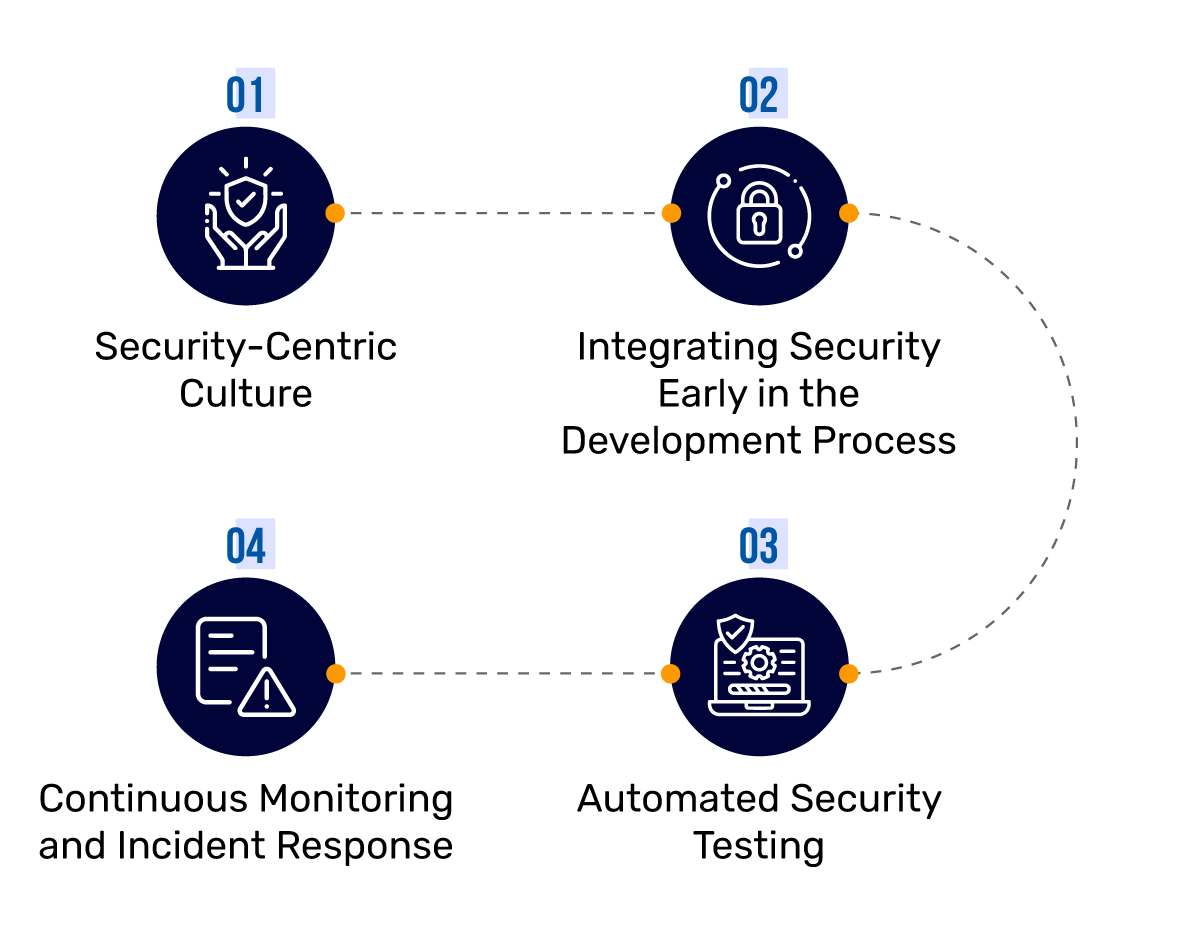

Implementing Secure AI Deployment Pipelines

In the wake of increasing AI-powered cyberattacks, securing your AI deployment pipelines is no longer optional—it's foundational. This workflow provides senior tech leaders with a practical, step-by-step approach to integrate robust security measures throughout the entire AI lifecycle, safeguarding models, data, and infrastructure from evolving threats.

Step-by-step:

- Conduct a Threat Model: Identify potential attack vectors specific to your AI models (e.g., prompt injection, data poisoning, model inversion) and deployment environment.

- Implement Secure MLOps Practices: Enforce strict access controls, versioning for models and data, and automated security scanning in CI/CD pipelines.

- Secure Data Supply Chain: Validate data sources, encrypt data at rest and in transit, and implement differential privacy techniques during training.

- Harden Inference Endpoints: Use API gateways with rate limiting, strong authentication, and input validation to protect deployed models from malicious queries.

- Monitor for Anomalies: Deploy AI-specific monitoring tools to detect model drift, unexpected outputs, and unauthorized access attempts in real-time.

- Establish Incident Response: Develop a clear incident response plan for AI-related security breaches, including rollback procedures and forensics.

- Regular Security Audits & Penetration Testing: Periodically assess your AI systems for vulnerabilities and simulate attacks to identify weaknesses before they are exploited.
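
The "Harden Inference Endpoints" step can be sketched in a few lines. Below is a minimal token-bucket rate limiter plus a basic prompt validator, using only the Python standard library. The class and function names, the character limit, and the rejection rules are assumptions for illustration; in production these checks usually live in an API gateway rather than application code.

```python
import time


class TokenBucket:
    """Allow bursts up to `capacity`, refilling at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: int, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)  # start with a full bucket
        self.clock = clock             # injectable so tests can control time
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill for the time elapsed since the last call, capped at capacity.
        elapsed = now - self.updated
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


MAX_PROMPT_CHARS = 4000  # hypothetical limit; tune to your model and use case


def validate_prompt(prompt) -> str:
    """Reject obviously malformed input before it reaches the model."""
    if not isinstance(prompt, str):
        raise TypeError("prompt must be a string")
    cleaned = prompt.strip()
    if not cleaned:
        raise ValueError("prompt is empty")
    if len(cleaned) > MAX_PROMPT_CHARS:
        raise ValueError("prompt exceeds maximum length")
    if any(ord(ch) < 32 and ch not in "\n\r\t" for ch in cleaned):
        raise ValueError("prompt contains control characters")
    return cleaned


# Example: a client allowed a burst of 2 requests, then 1 request per second.
bucket = TokenBucket(rate=1.0, capacity=2)
for attempt in range(3):
    print(f"request {attempt + 1} allowed: {bucket.allow()}")
```

Length and character checks do not stop prompt injection on their own; they only narrow the input surface, so they complement the threat modeling and monitoring steps rather than replace them.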

Sample Prompts:

- "Generate a threat model for a new generative AI application deployed on [Cloud Provider], considering prompt injection, data leakage, and model manipulation risks."

- "Design an automated security scanning workflow for our MLOps CI/CD pipeline, focusing on container vulnerabilities and model integrity checks."

- "Create a template for an AI incident response plan that includes steps for containing, analyzing, and recovering from a model poisoning attack."
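
For the "model integrity checks" mentioned in the second prompt, one common building block is comparing a model artifact's SHA-256 digest against a value recorded at training time. The sketch below uses only the standard library; the function names and the idea of storing the expected digest in a release manifest are assumptions, not a prescribed pipeline design.

```python
import hashlib
import hmac


def sha256_file(path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large model weights need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path, expected_sha256: str) -> bool:
    """Return True only if the file's digest matches the recorded one.

    `expected_sha256` is assumed to come from a trusted source such as a
    signed release manifest (hypothetical here).
    """
    actual = sha256_file(path)
    # compare_digest avoids leaking match position through timing.
    return hmac.compare_digest(actual, expected_sha256.strip().lower())
```

In a CI/CD job, a failed `verify_artifact` call would typically block the deployment stage. A digest check detects tampering after the digest was recorded; it says nothing about whether the model was poisoned during training.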

Fortify your AI defenses today and build a resilient foundation for your enterprise AI strategy.

Open tutorial

🎓 Key Insight of the Day

"In the relentless pursuit of AI innovation, resilience and responsibility are the true measures of enterprise maturity."

- The Cost-Security Nexus: OpenAI's soaring inference costs underscore that scaling AI without a cost-optimization strategy is financially unsustainable, just as scaling it without a robust security posture is operationally unsustainable. Neglecting either one creates a strategic liability.

- Regulatory Imperatives vs. Business Agility: The EU's potential rollback of AI privacy rules highlights the tension between regulatory ambitions and business demands. Tech leaders must navigate this friction by building flexible, compliance-aware AI architectures rather than reactive ones.

- Cyber Threats Demand Proactive Defense: Anthropic's success in foiling an AI-powered cyberattack is a stark reminder that AI is a double-edged sword. Proactive defense mechanisms, secure pipelines, and continuous monitoring are now non-negotiable, moving beyond traditional cybersecurity paradigms.

- Infrastructure as the Bedrock of Resilience: The d-Matrix funding for inference chips signals a renewed focus on the underlying hardware as a critical component of resilient AI. Custom, efficient infrastructure is key to controlling costs and enhancing security at scale.

In short: The next phase of enterprise AI demands a pivot from pure speed to strategic resilience, where managing costs, proactively securing systems, and adapting to regulation are as critical as the innovation itself.